Deep Learning 42 (3): TensorFlow Implementation of Info GAN

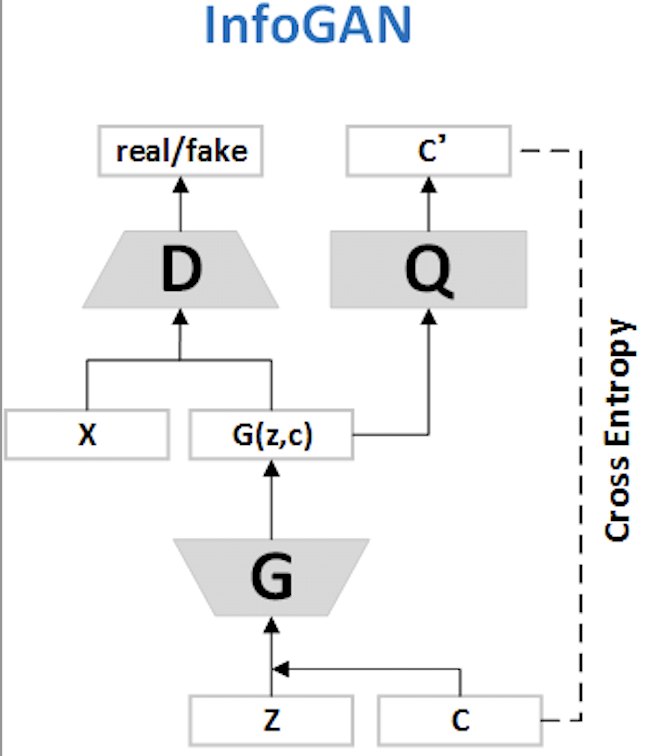

InfoGAN approaches the problem of learning interpretable representations by splitting the generator input into two parts: the traditional noise vector and a new "latent code" vector. The codes are then made meaningful by maximizing the mutual information between the code and the generator output.
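
To make the split concrete, here is a minimal sketch of how one generator input could be assembled. The layout is an assumption chosen for illustration: a 62-dimensional Gaussian noise vector z, one 10-way categorical code stored as a one-hot vector, and two continuous codes drawn uniformly from [-1, 1]. The function name `sample_generator_input` is hypothetical.

```python
import random

def sample_generator_input(noise_dim=62, num_categories=10, num_continuous=2, rng=None):
    """Build one InfoGAN-style generator input: noise z followed by a latent code c.

    Hypothetical layout: a one-hot categorical code followed by
    `num_continuous` continuous codes drawn from Uniform(-1, 1).
    """
    rng = rng or random.Random()
    # Traditional noise vector z ~ N(0, 1); carries no intended meaning.
    z = [rng.gauss(0.0, 1.0) for _ in range(noise_dim)]
    # Categorical code: choose one category uniformly and one-hot encode it.
    category = rng.randrange(num_categories)
    c_cat = [1.0 if i == category else 0.0 for i in range(num_categories)]
    # Continuous codes ~ Uniform(-1, 1).
    c_cont = [rng.uniform(-1.0, 1.0) for _ in range(num_continuous)]
    c = c_cat + c_cont
    # The generator G(z, c) consumes the concatenation of z and c.
    return z, c, z + c

z, c, g_input = sample_generator_input(rng=random.Random(0))
print(len(z), len(c), len(g_input))  # 62 12 74
```

Only the code part c is later tied to the output through the mutual-information term; z stays unconstrained.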

InfoGAN stands for Information Maximizing GAN, and the information it maximizes is mutual information. In information theory, the mutual information between X and Y, $I(X;Y)$, measures the "amount of information" learned about the random variable X from knowledge of the random variable Y.
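
For discrete variables the definition can be evaluated directly: $I(X;Y) = H(X) - H(X \mid Y) = \sum_{x,y} p(x,y) \log \frac{p(x,y)}{p(x)\,p(y)}$. The helper below is a minimal sketch of that sum in nats; the name `mutual_information` and the list-of-lists input format are illustrative choices, not part of any library.

```python
import math

def mutual_information(joint):
    """I(X; Y) in nats for a discrete joint distribution given as a 2-D list.

    joint[i][j] = P(X = i, Y = j); entries must be non-negative and sum to 1.
    """
    p_x = [sum(row) for row in joint]         # marginal P(X)
    p_y = [sum(col) for col in zip(*joint)]   # marginal P(Y)
    mi = 0.0
    for i, row in enumerate(joint):
        for j, p_xy in enumerate(row):
            if p_xy > 0.0:  # terms with p(x, y) = 0 contribute nothing
                mi += p_xy * math.log(p_xy / (p_x[i] * p_y[j]))
    return mi

# Independent variables: knowing Y tells us nothing about X.
print(mutual_information([[0.25, 0.25], [0.25, 0.25]]))  # 0.0
# Y is an exact copy of a fair coin X, so I(X; Y) = H(X) = log 2 nats.
print(mutual_information([[0.5, 0.0], [0.0, 0.5]]))
```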

InfoGAN is a type of generative adversarial network that modifies the GAN objective to encourage it to learn interpretable and meaningful representations. This is done by maximizing the mutual information between a fixed small subset of the GAN's noise variables and the observations.

InfoGAN was introduced by Chen et al. [1] in 2016. In addition to the generator and discriminator of a standard GAN, it has an auxiliary network called the Q-network.
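
A common way to build the Q-network, and the design described in the InfoGAN paper, is to let it share the discriminator's layers and add one extra output head that predicts the latent code. The pure-Python sketch below mimics that sharing with tiny dense layers: one shared body, a D head that outputs a real/fake probability, and a Q head that outputs a distribution over a categorical code. The function names, layer sizes, and weights are hypothetical.

```python
import math

def dense(weights, bias, x):
    """Fully connected layer: one weight row per output unit."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def discriminator_and_q(x, params):
    """Shared body feeding two heads: D(x) and Q(c | x) for a categorical code."""
    h = [max(0.0, v) for v in dense(params["body_w"], params["body_b"], x)]  # shared ReLU features
    d_logit = dense(params["d_w"], params["d_b"], h)[0]
    d_prob = 1.0 / (1.0 + math.exp(-d_logit))                 # D head: probability x is real
    q_probs = softmax(dense(params["q_w"], params["q_b"], h))  # Q head: posterior over categories
    return d_prob, q_probs

# Hypothetical toy weights: 3 input features, 2 hidden units, 3 code categories.
params = {
    "body_w": [[1.0, 0.0, -1.0], [0.5, 0.5, 0.5]], "body_b": [0.0, 0.0],
    "d_w": [[1.0, -1.0]], "d_b": [0.0],
    "q_w": [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]], "q_b": [0.0, 0.0, 0.0],
}
d_prob, q_probs = discriminator_and_q([1.0, 2.0, 3.0], params)
print(round(d_prob, 4), [round(p, 3) for p in q_probs])
```

Sharing the body means Q adds only a small output layer on top of the discriminator, so the extra cost of the auxiliary network is modest.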

This paper describes InfoGAN, an information-theoretic extension to the Generative Adversarial Network that is able to learn disentangled representations in a completely unsupervised manner. InfoGAN is a generative adversarial network that also maximizes the mutual information between a small subset of the latent variables and the observation. We derive a lower bound to the mutual information.
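
The lower bound is usually written as $L_I(G, Q) = \mathbb{E}_{c \sim P(c),\, x \sim G(z, c)}[\log Q(c \mid x)] + H(c)$, where $Q$ is the auxiliary network approximating the posterior over codes. The sketch below estimates it from a batch, assuming a uniform categorical code so that $H(c) = \log K$; the function name and input format are hypothetical.

```python
import math

def info_lower_bound(sampled_codes, q_posteriors, num_categories):
    """Monte Carlo estimate of L_I = E[log Q(c | x)] + H(c) for a uniform categorical code c.

    sampled_codes[k]: category c_k that was fed to the generator to produce x_k = G(z_k, c_k)
    q_posteriors[k]:  Q's predicted probabilities over the categories, given x_k
    """
    # Average log-probability Q assigns to the code that actually generated each sample.
    # (math.log raises ValueError if Q assigns exactly zero probability to that code.)
    avg_log_q = sum(math.log(q[c]) for c, q in zip(sampled_codes, q_posteriors)) / len(sampled_codes)
    # Entropy of a uniform prior over K categories is log K; it stays constant during training.
    entropy_c = math.log(num_categories)
    return avg_log_q + entropy_c

codes = [0, 2, 1]
perfect_q = [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0], [0.0, 1.0, 0.0]]
uninformative_q = [[1 / 3, 1 / 3, 1 / 3]] * 3
print(info_lower_bound(codes, perfect_q, 3))        # equals log 3, the maximum value H(c)
print(info_lower_bound(codes, uninformative_q, 3))  # approximately 0: Q recovers nothing about c
```

Because $H(c)$ is a constant once the code prior is fixed, maximizing the bound in practice amounts to minimizing the cross-entropy between the sampled codes and Q's predictions.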

Generative Adversarial Networks, or GANs, are generative models built on deep learning architectures that have seen wide success. There are thousands of papers on GANs and many hundreds of named GANs, that is, models with a defined name that often includes "GAN", such as DCGAN, as opposed to minor extensions of the method. Given the vast size of the GAN literature and the number of models, it can be, at …

The Information Maximizing GAN, or InfoGAN for short, is an extension to the GAN architecture that introduces control variables that are automatically learned by the architecture and allow control over the generated image, such as style, thickness, and type in the case of generating images of handwritten digits.
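
Control over attributes such as thickness is typically inspected with a latent traversal: keep the noise and the categorical code fixed and sweep one continuous code across its range, then look at what changes in the generated images. The sketch below only builds the generator inputs for such a sweep; the function name and the input layout (noise, then one-hot category, then continuous codes) are assumptions. Which attribute a given code ends up controlling is discovered after training, not fixed in advance.

```python
def latent_traversal(z, category, num_categories, num_continuous, vary_index,
                     steps=5, low=-1.0, high=1.0):
    """Build generator inputs that sweep one continuous code, holding z and the category fixed."""
    if steps < 2:
        raise ValueError("steps must be at least 2 to span [low, high]")
    c_cat = [1.0 if i == category else 0.0 for i in range(num_categories)]
    inputs = []
    for s in range(steps):
        # Evenly spaced values from low to high, inclusive.
        value = low + (high - low) * s / (steps - 1)
        c_cont = [0.0] * num_continuous
        c_cont[vary_index] = value  # only this continuous code changes
        inputs.append(z + c_cat + c_cont)
    return inputs

z = [0.1, -0.3]  # hypothetical fixed noise, kept tiny for readability
rows = latent_traversal(z, category=7, num_categories=10, num_continuous=2, vary_index=0)
print([row[-2] for row in rows])  # [-1.0, -0.5, 0.0, 0.5, 1.0]
```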

Generative Adversarial Network (GAN) in TensorFlow, Part 1

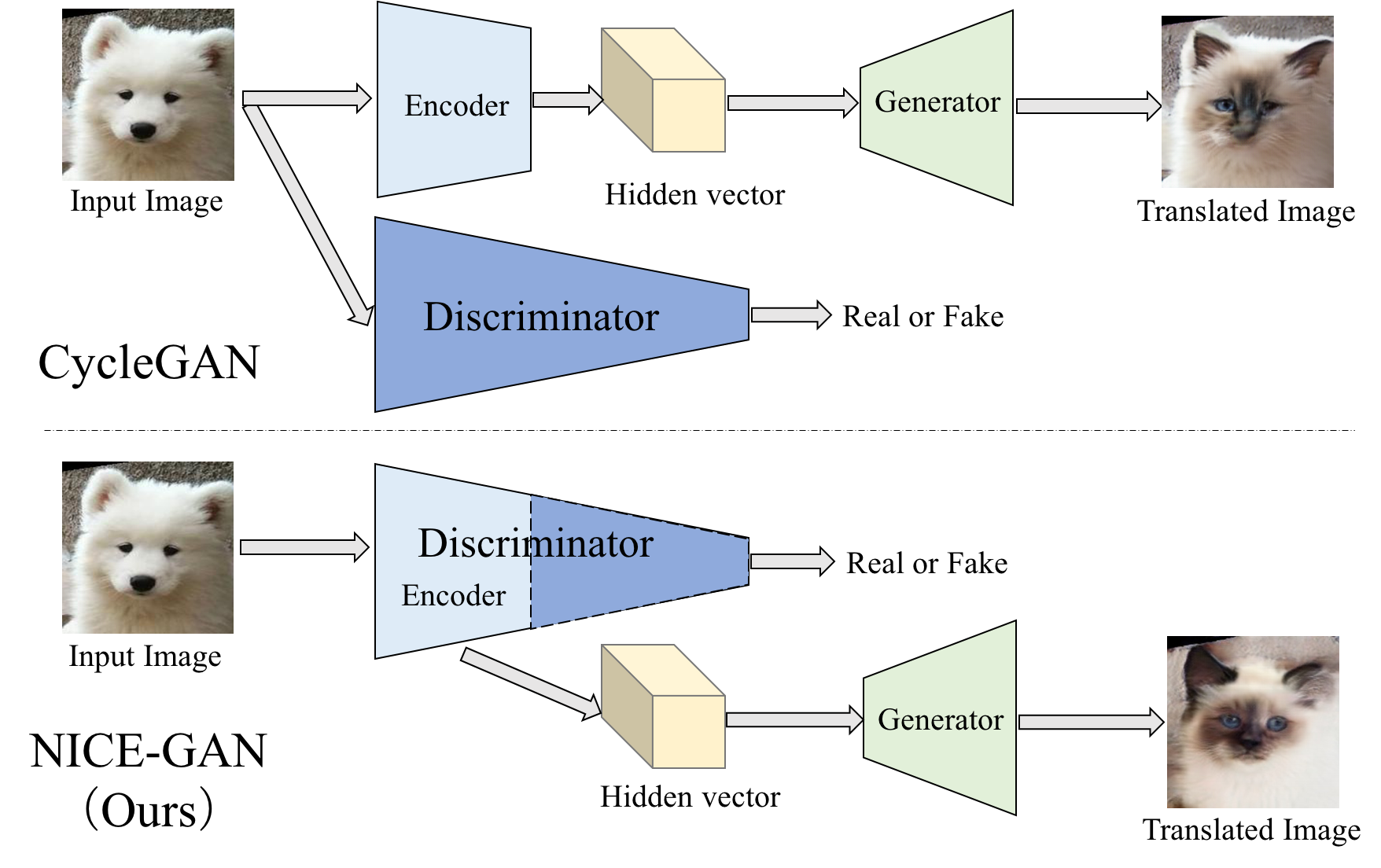

The main issue in NICE-GAN is the coupling of translation with discrimination along the encoder, which could cause training inconsistency when the min-max game is played via the GAN objective. To tackle this issue, we develop a decoupled training strategy in which the encoder is trained only when maximizing the adversarial loss and is kept frozen otherwise.
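
The decoupled strategy can be sketched as a parameter-freezing rule: the shared encoder receives updates only in the discriminator step (where the adversarial loss is maximized) and is left untouched in the generator step. The toy below uses scalar parameters and plain gradient-descent updates; the parameter names, grouping, and the assumption that `grads` already holds gradients of the loss each player minimizes are all hypothetical.

```python
def sgd_update(params, grads, lr, trainable):
    """Return updated params; only names in `trainable` move, all others stay frozen."""
    return {name: (value - lr * grads[name] if name in trainable else value)
            for name, value in params.items()}

ENCODER = {"encoder"}      # shared encoder, part of the discriminator
DISC_HEAD = {"disc_head"}  # discriminator classifier on top of the encoder
GENERATOR = {"decoder"}    # translation decoder that reuses the encoder's features

def discriminator_step(params, grads, lr):
    # Adversarial-loss maximization: the encoder is trained together with the classifier head.
    return sgd_update(params, grads, lr, ENCODER | DISC_HEAD)

def generator_step(params, grads, lr):
    # Generator update: the shared encoder is frozen; only the decoder changes.
    return sgd_update(params, grads, lr, GENERATOR)

params = {"encoder": 1.0, "disc_head": 2.0, "decoder": 3.0}
grads = {"encoder": 1.0, "disc_head": 1.0, "decoder": 1.0}
print(generator_step(params, grads, 0.5))      # encoder unchanged, decoder moves
print(discriminator_step(params, grads, 0.5))  # encoder and disc_head move, decoder unchanged
```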

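With the latent code added, the generator becomes $G(z, c)$. However, in a standard GAN the generator is free to ignore the additional latent code $c$ by finding a solution satisfying $P_G(x \mid c) = P_G(x)$. To cope with this problem of trivial codes, we propose an information-theoretic regularization: there should be high mutual information between the latent code $c$ and the generator distribution $G(z, c)$, that is, $I(c; G(z, c))$ should be high.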
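
Written out, the regularized minimax game and its tractable surrogate look as follows. This is a summary in the usual InfoGAN notation rather than a verbatim copy of the paper's equations: $\lambda$ is a weighting hyperparameter, $Q(c \mid x)$ is an auxiliary distribution approximating the intractable posterior $P(c \mid x)$, and $H(c)$ is the entropy of the code prior. If the generator ignores $c$, so that $P_G(x \mid c) = P_G(x)$, then $I(c; G(z, c)) = 0$ and the regularizer gives no reward, which is what pushes $G$ away from trivial codes.

$$
\min_{G}\max_{D}\; V_I(D, G) = V(D, G) - \lambda\, I\big(c;\, G(z, c)\big)
$$

$$
L_I(G, Q) = \mathbb{E}_{c \sim P(c),\; x \sim G(z, c)}\big[\log Q(c \mid x)\big] + H(c) \;\le\; I\big(c;\, G(z, c)\big)
$$

$$
\min_{G, Q}\max_{D}\; V_{\mathrm{InfoGAN}}(D, G, Q) = V(D, G) - \lambda\, L_I(G, Q)
$$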

Architectures of GAN and InfoGAN.

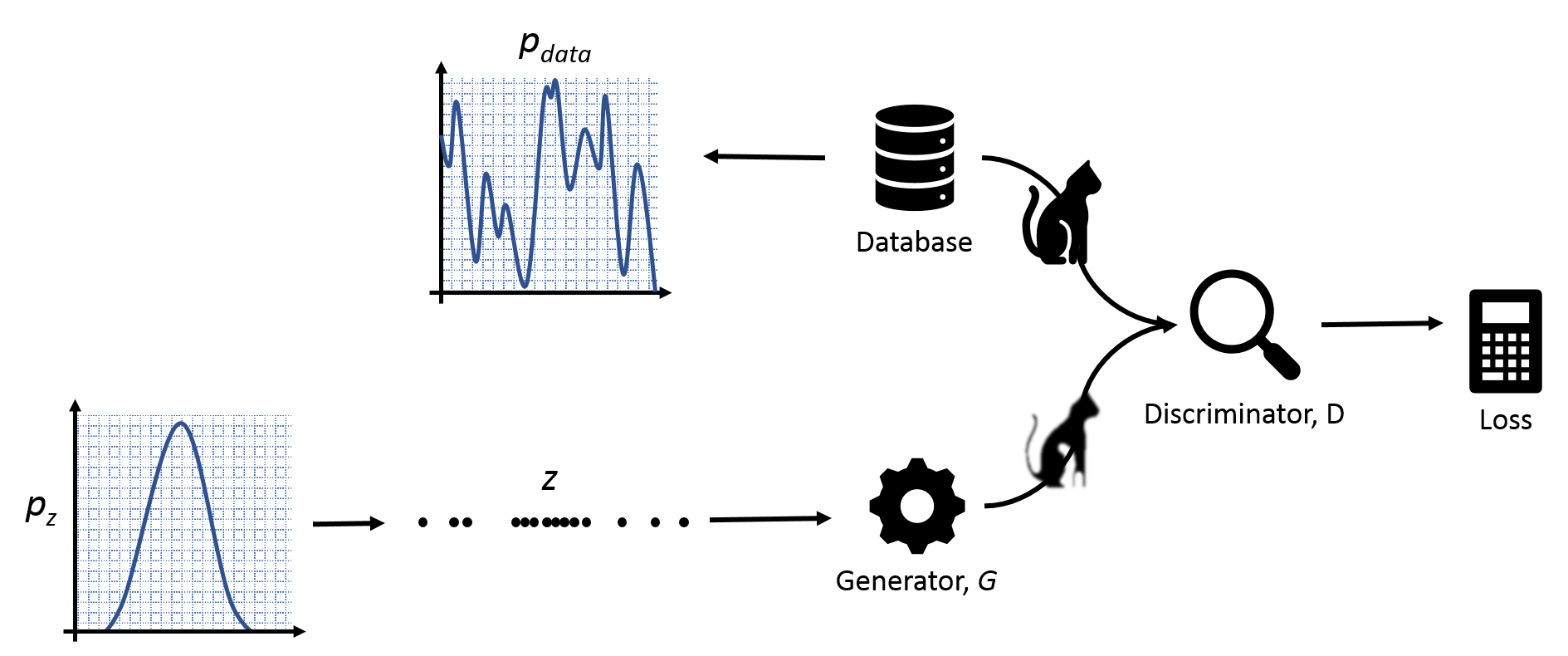

A Generative Adversarial Network (GAN) belongs to the family of machine learning (ML) frameworks. These networks were introduced by Ian Goodfellow and his colleagues, who drew inspiration from noise-contrastive estimation, whose loss function resembles the one used in present-day GANs (Grnarova et al., 2019). According to this account, practical work applying GANs to human faces for image enhancement began in 2017.

Claude Shannon's 1948 paper defined the amount of information that can be transmitted over a noisy channel in terms of signal power and bandwidth. A research study by Xi Chen and colleagues proposes a GAN-style neural network that uses information theory to learn "disentangled representations" in an unsupervised manner.
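
The power-and-bandwidth result is usually stated as the Shannon-Hartley theorem: for a channel with additive white Gaussian noise, capacity is $C = B \log_2(1 + S/N)$ bits per second, where $B$ is the bandwidth in hertz and $S/N$ is the ratio of signal power to noise power. A small sketch (the function name is hypothetical):

```python
import math

def channel_capacity(bandwidth_hz, signal_power, noise_power):
    """Shannon-Hartley capacity C = B * log2(1 + S/N), in bits per second (AWGN channel assumed)."""
    return bandwidth_hz * math.log2(1.0 + signal_power / noise_power)

# A 3 kHz channel with equal signal and noise power carries at most 3000 bits per second.
print(channel_capacity(3000.0, 1.0, 1.0))  # 3000.0
```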

The approach is motivated from an information-theoretic point of view and is based on maximizing the mutual information between the latent codes and the generated images. For completeness it would be nice to have V(D,G) formulated in terms of x, c, z: it is not 100% clear from equation (1). However, in pure GAN the learning algorithm …
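
One way to write V(D,G) explicitly in terms of x, z and c is the standard GAN value function with the generator fed both inputs: $V(D, G) = \mathbb{E}_{x \sim P_{\text{data}}}[\log D(x)] + \mathbb{E}_{z \sim P(z),\, c \sim P(c)}[\log(1 - D(G(z, c)))]$. The sketch below estimates it from discriminator outputs on a batch of real and generated samples; the function name is hypothetical and the outputs are assumed to lie strictly between 0 and 1.

```python
import math

def gan_value(d_real, d_fake):
    """Monte Carlo estimate of V(D, G) from discriminator outputs.

    d_real: D(x) for real samples x ~ P_data
    d_fake: D(G(z, c)) for generated samples with z ~ P(z), c ~ P(c)
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

# A discriminator that outputs 0.5 everywhere gives V = -log 4.
print(gan_value([0.5, 0.5], [0.5, 0.5]))
```

The value -log 4 is what the standard GAN analysis gives at the equilibrium where the generator matches the data distribution and D cannot tell real from fake.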

To understand the structure of InfoGAN, start with a normal GAN, which has two fundamental elements: a generator that accepts random noise and produces fake images, and a discriminator that accepts both fake and real images and identifies whether each image is real or fake.

InfoGAN is designed to maximize the mutual information between a small subset of the latent variables and the observation. Because this mutual information is hard to compute directly, a lower bound of the objective is derived and optimized instead.